Recommended for digestibility – web version on PubPub

For further information or to provide feedback, contact info [at] pllel [dot] com.

Reduced forms also available: Primer // Analytical Index as Compressed Essay // Short talk

Taking the layered systems approach to its supra-logical endpoint. Chains of blocks ALL the way down.

Welcome to Canberra’s latest premium residential high rise address. DM for PayPal details. #realestate #canberra #soundart #noise #3dprinting #mechanicalart #truesciencefact pic.twitter.com/LEGCIi7Md3

— Brian McNamara (@Rarebeasts) May 6, 2019

I’ve been invoking a layered stack model (after OSI and Buterin) to attempt finer grain characterisations, explanations and rationalisations of various epistemic and phenomenological happenings in the domain of cryptocurrencies and blockchain-architected P2P networks for a while. It’s a helpful lens with which to attempt definitions of many of the most loosely employed terms in regular use — decentralisation, permissionlessness, censorship-resistance, immutability. Take a look at Reaching Everyone Pt II for the low-down.

“Layering has both technical and social implications: it makes the technical complexity of the system more manageable, and it allows the system to be designed and built in a decentralized way.”

— شتر دیدی؟ ندیدی (@arbedout) May 13, 2019

How layering made building the ARPANet possible: pic.twitter.com/wOEE5d578C

The reason for the above framing is that the acid test of a conceptual framework’s robustness when harnessed to build classification systems such as taxonomies and typologies is its ability to accept new objects or be generalisable into a meta-methodology. To this end, one can also readily frame the ontological quest (simply put, the pursuit of wisdom and meaning) as a layered stack of intellectual disciplines. I’m sure this notion of an ontological meta-stack isn’t a totally original approach, but having played with it for a while it’s become a useful conceptual lens with which to (try to) understand how trans/multi/inter/anti/pan/omni/supra/quasi/para/post disciplinary research (choose your favoured poison) differs from steady state scholarly work. Furthermore, layered stacks may be one of the most easily invoked mechanisms to achieve differential discretisation whilst grappling with linear domains. Apropos of nothing: a few years ago I was completely preoccupied with the wacky idea of starting a serious-and-satirical research disorganisation-superimposition that I was very tongue-in-cheekily calling The Institute of Non-Linear Phenomenology. Stacks of layers need not apply within.

Overly individuated, siloed or specialised knowledge domains — typically mature fields — tend to be hyper-focused on very small regions of the spectrum rather like the spectroscopic disciplines which I spent a decade playing supramolecular detective with. A photophysicist / spectroscopist could spend an entire lifetime “playing with” a narrowly bounded set of quantised energy levels in an atom, molecule, crystal or superstructure.

Likewise, a researcher could spend a fruitful and fulfilling career looking for the same signatures in wildly different systems. I studied the same ν(CO) vibrational signature in both exotic low-temperature astrophysical ices and in complex solution-based supramolecular assemblies. The same fingerprint can be exploited to provide rich information with respect to its environment on both sub-nanosecond and celestial timescales!

It would be remiss to attempt an approach to this weighty subject without discussing taxonomy, epistemology and more generally the design science of information systems. Taxonomy is one of the more powerful intellectual tools at our disposal with which to progress towards ontologies — although a classification system may not have truth value in itself, it may be an intermediate development and therefore useful in its own right.

Let’s not get too deep into the weeds here, instead take a look at various TokenSpace materials to go deeper: Primer, Q&A, Compressed Essay as Analytical Index, Manuscript (available on request), Lecture video (soon!).

Tegmark’s notion of subjectivity at the Universe’s margins (Planck limits, complex adaptive systems) with empirical objectivist domains betwixt seems apropos here. Let’s call it a subjectivity sandwich. Feynman famously opined “There’s Plenty of Room at the Bottom”, but there ostensibly exists even more room at the top!

Okay so here it is, the first iteration anyway. Let me know what you think by commenting or pinging me on Twitter. In reality, this framework may not be truly linear, granular and hierarchical but there is hopefully some value to it. Perhaps the next iteration of this half-baked idea will be an open form: woven meshes, interlinked gears — an ontological meta-DAG!?!?

As we move from bottom to top, the complexity of the agents in focus increases alongside subjectivity. But at sub-quantum scales, the Universe also appears subjective, at least based on observations through our current paradigmatic lenses. Interesting phenomena tend to emerge at the margins, between disciplines or even as a synthesis of several elements of the meta-stack. Perhaps it’s time to repurpose the wonderful bibliotechnical term marginalia to capture this essence.

Cryptocurrencies are a great example of systems drawing on a number of these components. Indeed at the network level these protocols are very simple messaging systems but can exhibit extremely complex and unexpected emergent phenomena; Forkonomy is a good example of an attempt to apply unusual lenses to characterise unusual goings-on. This may also help to explain why researchers possessing deep subject-specific knowledge pertaining to one of the areas which cryptocurrency draws upon (cryptography, distributed systems, protocol engineering, law, economics, complex systems) often find it difficult to communicate with fellow scholars rooted in another traditional pastime. Perhaps the rise of novel meta-disciplines such as complexity science shows that one approach, in our parlance, to harness and further understand non-linear domains is to capture as much of the stack as possible.

TLDR: Are we entering a new “Age of Techno-Dilettantism”?

Table of contents as compressed essay is a very good form (Feyerabend, “Against Method”) pic.twitter.com/V24bI7Wry5

— Adam Strandberg (@The_Lagrangian) April 20, 2019

TokenSpace: A Conceptual Framework for Cryptographic Asset Taxonomies. Analytical Index As Compressed Manuscript

1 Introduction & Historical Review

Characterising the properties of physical and conceptual artifacts has never been trivial, and as the fruits of human labour proliferate in both number and complexity this task has only become more challenging. The quest of bringing order, process, context and structure to knowledge has been a critical but seriously underappreciated component of the success story of intellectual development in domains such as philosophy, mathematics, biology, chemistry and astronomy, from which the higher echelons of the ontological meta-stack which embody the richness of modern human experience have emerged. Despite the undeniable observation that we live in a society driven by finance and economics, these fields still exist in a relatively underdeveloped state with respect to wisdom traditions, and the very recent accelerationist emergence of cryptographic assets has completely outrun any scholarly approach to understanding this sui generis population of intangible, digitally scarce objects.

1.1 Necessity for the Work, Regulatory Opacity & Uncertainty

Over the time of writing and researching this work (2017–2019) the sentiment and perceived landscape inhabited by the field of cryptoassets has shifted significantly. This work was conceived in the midst of the 2017 bull market and Initial Coin Offering tokenised fundraising frenzy as a potential remedy to mitigate the naive conflation of cryptoassets as self-similar, with potential legal, compliance and regulatory ramifications. Over the intervening time, various national and international regulators and nation-state representatives have made comments, pronouncements and legislation taking a wide range of approaches to attempting to bring jurisdictional oversight to financial assets and products built upon borderless peer-to-peer protocols. This has set up a game of regulatory arbitrage in extremis as participants in the wild distributed boomtown surrounding cryptocurrency networks hop between countries faster than legal systems can react and adjust.

1.2 Hybrid Character of Cryptocurrency Networks & Assets, the Complex Provenance & Nature of Decentralisation

The protocols, networks and assets springing forth from cryptocurrency innovation are complex, intricate and exhibit a high degree of emergent behaviour and phenomena which prove very hard to predict or explain ex ante. There are also significant semantic challenges with terms such as “blockchain”, “decentralisation”, “censorship-resistance”, “permissionlessness” and “immutability” being used without precise definition. For these reasons there are a great many challenges with legalistic approaches that are put forward by persons and entities either with vested interests or lacking a deep understanding of the nuanced factors at play within these complex systems and associated assets.

1.3 Legal, Economic & Regulatory Characteristics of Cryptographic & Legacy Assets

Before a rigorous regulatory approach can be taken with respect to cryptoassets, the nature of the objects themselves must be deconvoluted. This work takes the approach that, for regulatory purposes at least, these objects may be characterised with sufficient meaningfulness as hybrids between securities, commodities and moneys.

1.3.1 Nature of Money Throughout the Ages

The use of objects as money has accompanied human progress, at least for the past several millennia. Aristotle wrote in the 4th century BC about the desirable properties of a money as fungibility, divisibility, portability and intrinsic value. Jevons framed this in terms of usage as stores of value, media of exchange and units of account. Chung took Baudrillard’s notion of the simulacrum to theorise that as technological progress continues, the willingness and / or expectations of human societies to use more technologically advanced objects as money moved in tandem. Economics provided the concept of stock-to-flow; the varying extents of rarity of monetary objects were tested by advances in transportation, and geo-arbitrage exploited asymmetries in small-scale monetary systems. As technology widens the horizons of human society, even globally rare monetary objects such as precious metals become susceptible to supply inflation from seawater or asteroid recovery. Cryptographic assets provide the first instantiation of algorithmically enforced and provable, universal rarity. Unforgeable costliness of production may be the necessary counterpoint to nation-state controlled seigniorage, debasement and politically motivated manipulation of monetary supply to serve purposes of legacy institutions rather than the populace.

1.3.2 What Makes a Good Become Commoditised?

The commodification of goods arguably dates back as far as the development of agrarian societies. Marxist economics defines a commodity good as the fruit of productivity, by human or machine. More generally any sufficiently widely useful and in demand object which becomes standardised can be regarded a commodity.

1.3.3 Regulating Securitised Asset Issuance in a Post-Howey Paradigm

A security is simply an agreement to provide benefits from an underlying asset in return for investment. US legal precedent such as the WJ Howey, Silver Hills and Reves vs. Ernst & Young cases showed how orange groves, financial “notes” and golf courses could be securitised, not just obviously financialised assets such as company equity and debt. The critical features of a securitised asset are: investment agreement, capital risked, expectation of profit, entities relied upon to deliver returns, fractional ownership of underlying, governance rights, and returns such as cashflows in addition to capital appreciation.

Considering cryptoassets, many projects exhibit some or all of these characteristics but very few prior to 2018/9 were openly registered as securities. Some senior regulatory officials made comments which further muddied the waters by discussing certain assets as having been initially distributed in securities offerings but having become “sufficiently decentralised” to no longer bear those hallmarks without providing definitions or heuristics. This leads to the notion that many projects are strongly incentivised to engage in decentralisation theatre, to carefully configure the outward appearance of their networks and/or tokens as decentralised in order to circumvent compliance burden of securities regulation. Clearly a more sophisticated conceptual framework is required to rationalise the similarities and differences between the diverse constellation of cryptographic networks and assets existing now and in the future.

1.3.4 Legacy Assets Exhibiting Hybrid Characteristics

Marx wrote at great length in Das Kapital about the interrelationship between commodities and moneys and how a good might move from one to the other. Some short-dated government debt could be considered as securitised, commoditised and as a monetary equivalent. Precious metals have at times been considered commodities and commodity-moneys.

2 Classification Approaches & Design Science of Information Systems

Designing conceptual systems to systematise knowledge is an intellectually demanding pastime that requires a deep knowledge of the subject material to produce meaningful and useful outputs. There is still merit in attempts which do not meet their goals, as they may be steps towards a goal which requires advances in theory, bodies of knowledge, empirical information or maturation of a scholarly ecosystem.

2.1 Definitions of Terms Within Paradigms of Classification

Classification: Spatial, temporal or spatio-temporal segmentation of the world; ordering on the basis of similarity

Classification System: A construction for the abstract groupings into which objects can be put

Framework: A set of assumptions, concepts, values and practices that constitutes a way of understanding the research within a body of knowledge

Typology: A series of conceptually-derived groupings, can be multivariate and predominantly qualitative in nature

Taxonomy: Empirically or conceptually derived classifications for the elucidation of relationships between artifacts

Taxonomic System: A method or process from which a taxonomy may be derived

Cladistic Taxonomy: Historical, deductive or evolutionary relationships charting the genealogical inter-relationships of sets of objects

Phenetic Taxonomy: Empirically derived groupings of attribute similarity, arrived at using statistical methods

2.2 Philosophy of Design Science & Classification Approaches

Bailey — ideal type, constructed type, substruction, reduction

For the most part typologies conceptually derive an ideal type (category) which exemplifies the apex (or maximum) of a proposed characteristic whereas taxonomies develop a constructed type with reference to empirically observed cases which may not necessarily be idealised but can be employed as canonical (or most typical) examples. Such a constructed type may subsequently be used to examine exceptions to the type.

“A researcher may conceive of a single type and then add dimensions until a satisfactory typology is reached, in a process known as substruction. Alternatively the researcher could conceptualise an extensive typology and then eliminate certain dimensions in a process of reduction.”

Popper’s Three Worlds vs Kuhn’s discontinuous paradigms

In contrast to Kuhn’s paradigmatic assessment of the evolution of concepts, Popper’s Three Worlds provides some philosophical bedrock from which to develop generalised and systematic ontological and / or epistemological approaches. The first world corresponds to material and corporeal nature, the second to consciousness and cognitive states and the third to emergent products and phenomena arising from human social action.

Niiniluoto applied this simple classification to the development of classifications themselves and commented:

“Most design science research in engineering adopts a realistic / materialistic ontology whereas action research accepts more nominalistic, idealistic and constructivistic ontology.”

Materialism attaches primacy to Popper’s first world, idealism to the second and anti-positivistic action research to the third. Design science and action research do not necessarily have to share ontological and epistemological bases. Three potential roles for application within information systems were identified: means-end oriented, interpretive and critical approaches. In terms of design science ethics Niiniluoto comments:

“Design science research itself implies an ethical change from describing and explaining the state of the existing world to shaping and changing it.”

Iivari considered the philosophy of design science research itself:

“Regarding epistemology of design science, artifacts of taxonomies without underlying axioms or theories do not have an intrinsic truth value. It could however be argued that design science is concerned with pragmatism as a philosophical orientation attempting to bridge science and practical action.”

Methodology of design science rigour is derived from the effective use of prior research (i.e. existing knowledge). Major sources of ideas originate from practical problems, existing artifacts, analogy, metaphor and theory.

2.3 Selected Examples of Taxonomy & Typology Approaches

Classification approaches can be traced back as far as Aristotle’s “Categories” and were first applied thoroughly in the natural sciences by botanists and zoologists such as Linnaeus and Haeckel, who employed a hierarchical and categorical binomial paradigm.

Periodic table of the elements, from occultist elementalism and alchemy to empirical verification of atomic structure and electronic bonding. Periodic tables are snapshots in time of the taxonomic progression, new elements and isotopes continue to be discovered and synthesised. Coal & tar trees.

Nickerson’s generalised and systematised information systems artifact classification approach (meta-taxonomy!) provided the methodological foundations for TokenSpace taxonomies, upon which the multi-dimensional framework was constructed.

2.4 Recent Approaches to Classifying Monetary & Cryptographic Assets

Burniske / Tatar: naive categorisation, two dimensions deep. Non-exhaustive, not explanatory.

BNC / CryptoCompare: data libraries, descriptive, more a typology

Kirilenko: nothing here — arbitrary data, scatterplot, risk/reward

BIS / Stone money flower: non-exhaustive, overlapping assignations.

Cambridge regulatory typologies: simple, unassuming, good! The classification framework next door. Potential for development.

Fridgen: a true Nickerson taxonomy. Cluster analysis to determine anisotropy in parameter space. Great but rather specific and limited in scope.

3 Designing TokenSpace: A Conceptual Framework for Cryptographic Asset Taxonomies

For a classification system to be useful and have explanatory value, it should address the limitations of understanding the differences between artifact properties within the populations of objects as they exist at present. TokenSpace is intended to be a conceptual framework addressing issues arising from limitations of appreciation of the hybrid nature and time-dependence of the qualities of cryptographic assets.

3.1 Introduction & Problem Statement of Taxonomy Development

Taxonomies should be explanatory, exhaustive, robust to new objects and irreducible without losing meaningfulness.

3.2 Construction of the TokenSpace Framework: Components & Methodology

Conventional taxonomies are categorical and can be either flat or hierarchical. The classification approach should be built to discriminate for a meta-characteristic, with a series of dimensions asking questions of each object, of which two or more categorical characteristics provide the options which should encompass the property in question.
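As a minimal sketch of that structure, a dimension can be modelled as a question with a closed set of categorical characteristics, each mapped to a score. Everything named here (the `Dimension` class, the example question, its options and their values) is a hypothetical illustration and is not taken from the TokenSpace manuscript.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimension:
    """One taxonomy dimension: a question plus its categorical options."""
    question: str
    characteristics: dict  # option label -> score in [0, 1] (hypothetical values)

    def score(self, option: str) -> float:
        # The options are meant to encompass the property in question,
        # so an unknown label is treated as a taxonomy design error.
        if option not in self.characteristics:
            raise KeyError(f"{option!r} is not a characteristic of {self.question!r}")
        return self.characteristics[option]


# Hypothetical dimension for a Securityness taxonomy.
issuance = Dimension(
    question="How was the asset initially distributed?",
    characteristics={"mined": 0.0, "airdrop": 0.5, "token sale": 1.0},
)

print(issuance.score("token sale"))
```

Raising on an unknown option is a design choice in this sketch: it surfaces a non-exhaustive dimension immediately rather than silently scoring an object the taxonomy cannot place.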

3.2.1 Building Robust Taxonomies based on Information Systems Best Practices

Elicited, weighted score taxonomy, intuitive, deductive etc.

3.2.2 Three Conceptual-to-Empirical Approaches to Short-Listing Taxonomy Dimensions & Characteristics

For the meta-characteristics of Securityness, Moneyness and Commodityness a series of attributes were produced from which to iterate through the construction of taxonomies.

3.3 Design Choices & Justifications for TokenSpace

3.3.1 TokenSpace as a Conceptual Representation of Spatio-Temporal Reality

Making an n-dimensional space with meta-characteristics as axes and asset locations based upon scored taxonomies.

3.3.2 Defining Boundaries

Bounds of TokenSpace between zero and one, possible to extend beyond to consider notions of good and bad characteristics but this is non-trivial.
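A bounded asset location can be sketched as a three-component coordinate, one per meta-characteristic, held inside the unit interval on each axis. The `Location` type and the clamping behaviour are assumptions made for illustration; the source only states that the bounds run from zero to one.

```python
from typing import NamedTuple


class Location(NamedTuple):
    """A point in a three-axis TokenSpace (illustrative type)."""
    securityness: float
    moneyness: float
    commodityness: float


def clamp(value: float, low: float = 0.0, high: float = 1.0) -> float:
    # Assumption: out-of-range scores are clipped rather than rejected.
    return max(low, min(high, value))


def place(securityness: float, moneyness: float, commodityness: float) -> Location:
    """Build a location whose coordinates all lie in [0, 1]."""
    return Location(clamp(securityness), clamp(moneyness), clamp(commodityness))


# Hypothetical raw scores, two of which fall outside the bounds.
loc = place(0.2, 1.3, -0.1)
print(loc)
```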

3.3.3 Dimensionality & Clarity

Three spatial dimensions enable the construction of a simple visual output which a non-technical user such as a lawmaker, politician or regulator may readily use to make informed subjective comparisons of asset characteristics. Orthogonality is assumed for visual clarity and is not necessarily real: the properties and hence taxonomies of Moneyness and Commodityness are clearly very similar.

3.3.4 Categorical & Numerical Characteristics

Categories have limitations — boundary conditions, edge cases, Goodhart’s Law susceptibility. Ranged indexed dimensions attempt to address this by trading off complexity for subjectivity, however the effectiveness of this approach is limited by the domain expertise of the researcher.

3.3.5 Score Modifiers & Weightings for Taxonomy Characteristics & Dimensions

The relative significance of each dimension to the overall score output by a TokenSpace taxonomy can be adjusted through weighted scoring, and indeed the taxonomies of Commodityness and Moneyness vary mostly in their weightings rather than the choice of dimensions and characteristics.
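One way to picture this is a weighted mean over shared dimension scores, with only the weightings differing between two meta-characteristics. The dimension names, scores and weights below are hypothetical, and the weighted mean is an assumed aggregation rule rather than the one used in the manuscript.

```python
def weighted_score(scores: dict, weights: dict) -> float:
    """Weighted mean of dimension scores; stays in [0, 1] if the inputs do."""
    total_weight = sum(weights[name] for name in scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(scores[name] * weights[name] for name in scores) / total_weight


# Hypothetical dimension scores for a single asset.
dimension_scores = {"fungibility": 0.8, "portability": 1.0, "supply_rigidity": 0.4}

# Same dimensions, different emphasis (hypothetical weightings).
moneyness_weights = {"fungibility": 2.0, "portability": 2.0, "supply_rigidity": 1.0}
commodityness_weights = {"fungibility": 1.0, "portability": 1.0, "supply_rigidity": 3.0}

moneyness = weighted_score(dimension_scores, moneyness_weights)
commodityness = weighted_score(dimension_scores, commodityness_weights)
print(moneyness, commodityness)
```

Dividing by the total weight keeps the output on the same zero-to-one scale as the inputs, so changing the weightings moves an asset within TokenSpace without pushing it out of bounds.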

3.3.6 Time-Dependence

Time-dependence can be assessed by charting the evolution of asset scores and locations in TokenSpace over time.
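A simple sketch of charting that evolution stores one location per date and measures how far the asset moves between snapshots. The years and coordinates are hypothetical placeholders, not scores from the TokenSpace case studies, and Euclidean distance is an assumed measure of movement.

```python
import math

# Hypothetical (securityness, moneyness, commodityness) snapshots for one asset.
trajectory = {
    2015: (0.9, 0.1, 0.1),
    2017: (0.6, 0.3, 0.2),
    2019: (0.4, 0.4, 0.4),
}


def displacements(history: dict) -> dict:
    """Euclidean distance moved between each pair of consecutive snapshots."""
    years = sorted(history)
    return {
        (start, end): math.dist(history[start], history[end])
        for start, end in zip(years, years[1:])
    }


def net_change(history: dict) -> tuple:
    """Per-axis change between the earliest and latest snapshot."""
    years = sorted(history)
    first, last = history[years[0]], history[years[-1]]
    return tuple(b - a for a, b in zip(first, last))


print(displacements(trajectory))
print(net_change(trajectory))
```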

3.3.7 Boundary Functions

Boundary surfaces may be used to delineate regions in TokenSpace for regulatory and / or compliance purposes. Taking the example of Ethereum and Hinman’s summer 2018 comments. ETH has moved through a boundary surface from “was security offering” to “no longer is”. Lack of precision in language, definitions, characterisation metrics and visual conception. TokenSpace can address these to some extent.
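A boundary surface can be sketched as a function whose sign says which side of the surface a location sits on, so that a crossing shows up as a sign change between two dates. The flat plane at a Securityness threshold of 0.5 and the before/after coordinates are hypothetical; a real regulatory boundary would need its own definition.

```python
def security_boundary(location: tuple, threshold: float = 0.5) -> float:
    """Positive on the 'security' side of a flat plane, negative on the other.

    Assumption: the boundary depends on Securityness alone. Any function of
    all three coordinates could be substituted.
    """
    securityness, _moneyness, _commodityness = location
    return securityness - threshold


def crossed(before: tuple, after: tuple, boundary=security_boundary) -> bool:
    """True if the asset moved from one side of the boundary to the other."""
    return (boundary(before) > 0) != (boundary(after) > 0)


# Hypothetical locations for one asset at two points in time.
at_launch = (0.8, 0.1, 0.1)
later = (0.3, 0.4, 0.4)
print(crossed(at_launch, later))
```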

3.3.8 Non-point & Anisotropic Asset Locations

The model can be extended beyond simple point asset locations in space through use of error bars or probability density functions as utilised in molecular orbital space filling representation in chemical bonding. The way that anisotropic score densities could be arrived at is via functions representing some of the dimensions yielding multiple, incompatible, quantised or inconsistent results or by elicitation resulting in a lack of consensus among panel members.
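For the elicitation route, a non-point location can be approximated by the mean and spread of panel members' scores on each axis; unequal spreads across axes give an anisotropic region rather than a point. The panel scores below are hypothetical, and summarising by sample standard deviation is an assumption standing in for a full probability density function.

```python
from statistics import mean, stdev

# Hypothetical (securityness, moneyness, commodityness) scores from three panel members.
panel_scores = [
    (0.7, 0.2, 0.3),
    (0.5, 0.3, 0.3),
    (0.9, 0.1, 0.3),
]


def summarise(panel: list) -> tuple:
    """Return (centre, spread): per-axis mean and sample standard deviation."""
    axes = list(zip(*panel))  # regroup scores by axis
    centre = tuple(mean(axis) for axis in axes)
    spread = tuple(stdev(axis) for axis in axes)
    return centre, spread


centre, spread = summarise(panel_scores)
print(centre)
print(spread)
```

Here the panel agrees on the third axis and disagrees most on the first, so the resulting region is elongated along Securityness.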

4 Creating a TokenSpace: TS10

It is difficult to exemplify the TokenSpaces without wholesale reproducing the taxonomies, scoring outcomes and visual depictions themselves so discussion will be largely limited to the process and outcomes.

4.1 Iterative Construction of Taxonomies, Indices & Score Modifiers

TS10 Taxonomy Development Iterations:

1) Progression from intuitively reasoned shortlists to categorical & indexed dimensions

2) Assigned unstandardised characteristic score modifiers (weightings incorporated), reduced number of dimensions, some categorical dimensions consolidated into index form

3) Standardised characteristic score modifiers to separately apply weightings, further reduction of dimensions, collapsing some categorical dimensions further into indices for ease of application — at possible expense of increased subjectivity

THIS IS THE BIG TOKENSPACE TRADEOFF — MITIGATING “GOODHART MANIPULABILITY” AT THE EXPENSE OF SUBJECTIVE REASONING. TRADEOFF CAN BE TUNED BY BALANCING CATEGORICAL AND INDEXED DIMENSIONS.

4.2 Placing Assets in TS10

Taxonomies and weighting modifiers used to construct a TokenSpace with top 10 marketcap assets.

4.3 Cluster Analysis & Correlations

Clustering, statistical distribution and correlations studied. Clusters mapping to sub-populations or “species” of assets such as PoW moneys, federated systems, ICO “smart contract” platforms, ETH, USDT, BTC occupying unique space somewhat.
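To illustrate how sub-populations might fall out of asset locations, the sketch below groups points that are linked by short hops (single-linkage grouping under a distance threshold). This technique is swapped in for illustration only; the source does not say which clustering method was used, and the asset labels and coordinates are hypothetical.

```python
import math
from itertools import combinations


def cluster(points: dict, max_distance: float) -> list:
    """Group labels whose points are joined by chains of hops <= max_distance."""
    parent = {label: label for label in points}

    def find(label):
        # Follow parent links to the group representative (with path halving).
        while parent[label] != label:
            parent[label] = parent[parent[label]]
            label = parent[label]
        return label

    for a, b in combinations(points, 2):
        if math.dist(points[a], points[b]) <= max_distance:
            parent[find(a)] = find(b)  # merge the two groups

    groups = {}
    for label in points:
        groups.setdefault(find(label), set()).add(label)
    return sorted((sorted(group) for group in groups.values()))


# Hypothetical (securityness, moneyness, commodityness) locations.
locations = {
    "asset_a": (0.10, 0.80, 0.70),
    "asset_b": (0.15, 0.75, 0.70),
    "asset_c": (0.90, 0.20, 0.10),
    "asset_d": (0.85, 0.25, 0.15),
    "asset_e": (0.50, 0.50, 0.50),
}

print(cluster(locations, max_distance=0.2))
```

An asset left alone in its own group, like `asset_e` here, is the kind of point that would be described as occupying unique space.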

4.4 TSL7: Using TokenSpace to Compare Cryptographic & Legacy Assets

This case study exemplifies why maximising the range of meta-characteristic scores within the population of objects is worthwhile, in that no cryptographic assets are particularly good moneys yet, especially in comparison to legacy assets such as USD and CHF. Benchmarking and normalisation are very valuable tools and have been used heavily in the construction
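Normalising against the whole population, including a strong legacy benchmark, can be sketched with min-max scaling so that the best-scoring object defines the top of the range. The asset labels and raw scores are hypothetical, and min-max scaling is an assumed choice of normalisation.

```python
def min_max_normalise(raw: dict) -> dict:
    """Rescale raw scores so the population spans the full [0, 1] range."""
    low, high = min(raw.values()), max(raw.values())
    if high == low:
        # Degenerate population: no spread to normalise against.
        return {name: 0.0 for name in raw}
    return {name: (value - low) / (high - low) for name, value in raw.items()}


# Hypothetical raw Moneyness scores; the legacy asset acts as the benchmark.
raw_moneyness = {"legacy_benchmark": 40.0, "crypto_a": 10.0, "crypto_b": 25.0}

print(min_max_normalise(raw_moneyness))
```

Without the benchmark in the population, the strongest cryptographic asset would be scaled to the top of the range and the comparison with legacy moneys would be lost.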

4.5 TSTDX: Time-Dependence of Selected Assets

A number of interesting observations can be made from this case study: monetary metals are decreasing in Moneyness with time as Bitcoin’s increases, ostensibly as the digitalisation of human society corresponds to favouring similarly digital (“simulacrised”) money such as Bitcoin over specie. In this respect, silver is some way ahead of gold, being largely a commodity rather than a commodity-money in the present day. The loss of gold and silver backing on moneys such as the British Pound (GBP) and the US Dollar (USD), leading to loss of Commodityness and Moneyness and an increase in Securityness, may also be rationalised as derealisation: a loss of mimetic gravitas in addition to simulacrum-related societal sentiment.

The time-dependence of cryptographic assets generally shows a trend of decreasing Securityness as the networks mature and assets become more adopted, distributed, widely held, useful and used. In concert Moneyness and Commodityness also tend to increase as more reasons to use, hold and transact with the assets emerge. Ethereum (ETH) is particularly remarkable as, in tandem with Hinman’s summer 2018 sentiments, what started as a securities offering of a centralised asset reliant on the efforts of a team of others for speculative gain has become (to some extent) more widely used, useful, held and distributed, hence leading to a decrease in Securityness and increases in Moneyness and Commodityness. It could perhaps be said that Ethereum in particular is well on the path to desecuritisation, or indeed may have arrived at that destination depending on where boundaries are perceived to lie in TokenSpace. The US Dollar (USD) still possesses a strong Moneyness being the de facto world reserve currency, though its Moneyness and Commodityness have been declining since the abandonment of gold-backing and the rise of the petrodollar system.

5 Discussion & Considerations Informing Further Development of TokenSpace

Decentralisation theatre upsides / downsides

Often token issuers engineer their assets to have a perceived value proposition by apportioning future cashflows to the holders, via mechanisms such as token burns, staking or masternodes which are coarsely analogous to share buybacks but with critical and significant differences to the downside. Likewise masternodes, staking rewards or issuer-sanctioned airdrops map coarsely to dividends in legacy finance. However, if a token is deemed to be too security-like then exchanges may be reluctant to list for fear of future liability or compliance issues.

Limitations of TokenSpace

It is important to explicitly discuss the limitations of TokenSpace. For the purposes of a subjective classification system such as TokenSpace, as many attributes of cryptographic networks and assets are continuous, exhibit subtle variations and / or edge cases, a mixture of categorical and numerical discrimination is most likely the optimal approach. Therefore, the instantiations of TokenSpace which will demonstrate the most explanatory power will be hybrids of traditional and phenetic taxonomy types. This design choice is justified by the desired outcome of numerical scores as the output of the classification execution in order to populate asset locations in the Euclidean 3D space that TokenSpace creates. Conversely in the interests of pragmatism, a great deal of insight may still be derived from a primarily categorical classification approach with some range-bound indices and if this meets the needs of the user then it is an acceptable and valid design choice. Further it minimises over-reliance on measurable attributes which may be subject to manipulation for motivations related to decentralisation theatre.

Looking in the mirror

As with all information systems, the principle of GIGO (Garbage In, Garbage Out) applies. A number of potential pitfalls are as follows, and the author does not exclude oneself from susceptibility to any or all of these. The use of misinformed judgement, lack of methodological rigour in taxonomy construction, over-estimation of the researcher’s knowledge of the field or competence in applying taxonomic methodology, latent biases, poor quality / misleading data sources or judgements and a lack of appreciation of edge cases or category overlap may severely limit the usefulness of the TokenSpace produced and therefore its explanatory power. It must be reiterated yet again that TokenSpace affords a subjective conceptual framework for the comparative analysis of assets. The meta-characteristic definitions and choices, dimensions, categories and characteristics employed, score modifiers and / or weightings are all subjective and depend on the choices of the researcher which derive from intended purpose. It is entirely realistic that an asset issuer may tailor their taxonomies, score modifiers, regulatory boundary functions or a combination of the above to present a favourable assessment with respect to their biases or motivations.

Changing goalposts

Additionally, the changing nature of the regulatory and compliance landscape may have a large bearing on what can be considered to be acceptable asset characteristics in compliance terms and may necessitate a re-evaluation of weightings and / or score modifiers. Drawing some distinction between “good” and “bad” securities, moneys or commodities is a worthwhile area to explore, as it potentially extends the explanatory power of the framework. A meaningfulness-preserving approach to occupying a region between -1 and +1 could provide a coarse mechanism to do this, though the way that dimension scores and weightings are determined would have to be adjusted. Naive methods such as taking moduli do not sufficiently discriminate as to the quality of an asset, nor would they easily be integrated into the weighted taxonomy approach, though individual dimensions could be assigned negative weightings rather than inverted dimension scores.
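The negative-weighting idea can be sketched by letting weights carry a sign and normalising by the sum of their absolute values, which keeps the output between -1 and +1 when dimension scores stay in the zero-to-one range. The dimension names, scores, weights and the normalisation rule are all hypothetical choices for illustration.

```python
def signed_score(scores: dict, weights: dict) -> float:
    """Weighted score in [-1, +1] for dimension scores in [0, 1].

    Negative weights mark dimensions treated as 'bad' characteristics.
    """
    norm = sum(abs(weights[name]) for name in scores)
    if norm == 0:
        raise ValueError("at least one weight must be non-zero")
    return sum(scores[name] * weights[name] for name in scores) / norm


# Hypothetical dimensions: one counted as favourable, one as unfavourable.
scores = {"utility": 1.0, "issuer_control": 1.0}
weights = {"utility": 1.0, "issuer_control": -3.0}

print(signed_score(scores, weights))
```

Because the sign lives in the weighting, the dimension scores themselves do not need to be inverted, and the same taxonomy can be reused with a different view of which characteristics count against an asset.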

Future directions

Future planned developments include the construction of TokenSpaces with higher dimensionality and alternative taxonomies for different meta-characteristics intended for purposes other than increasing regulatory clarification. Scoring mechanisms including categorical and indexed dimensions, score modifiers and weightings may also be further refined and extended. Other approaches to generating asset coordinates for TokenSpaces will also be explored, with plans in place to form “digital round tables” with broad subsets of stakeholders to arrive at asset scores or ranges. Work is underway with collaborators to extend TokenSpace into DAOSpace in order to characterise similarities and differences of “Decentralised Autonomous Organisations” as opposed to assets. One interesting nexus of DAOSpace and TokenSpace is attempting to disentangle the design choices and properties of decentralised organisations (and their native assets) with respect to Securityness in particular. The SEC has already made it clear that TheDAO tokens (DAO) would be classified as securities and therefore profit-oriented tokenised DAOs must be designed carefully with this in mind should they intend to be compliant with existing regulations. Interestingly Malta has passed laws giving DAOs legal personality, meaning that another cycle of jurisdictional arbitrage may be underway, this time with organisations as well as or instead of assets. Likewise stablecoins with certain properties especially related to asset issuance may also be problematic from a compliance perspective so a potential extension of this work towards a StablecoinSpace classification framework for pegged assets is an avenue being explored currently.

A future goal of TokenSpace is the development of an environment which may be updated in real-time from various information feeds from market, semantic / linguistic and / or network data in order to provide dynamic information as to evolving asset characteristics as well as historical trends at varying points in time. This may facilitate the goal of descriptive, explanatory and even predictive techniques for understanding, rationalising or foreseeing trends, issues and opportunities relating to assets and networks before they become readily apparent from naive analysis.

Want moar? Take a look at various TokenSpace materials to go deeper: Primer, Q&A, Manuscript (available on request), Lecture video (soon!).

Wassim Alsindi previously directed research at independent laboratory Parallel Industries, analysing cryptocurrency networks from data-driven and human perspectives. Parallel Industries activities and resources are archived at www.pllel.com and @parallelind on Twitter.

Wassim is now working on a new journal and conference series for cryptocurrency & blockchain technology research in a collaborative endeavour between the Digital Currency Initiative at the MIT Media Lab and the MIT Press. Our goal is to bring together the technical fields of cryptography, protocol engineering and distributed systems research with epistemic, phenomenological and ontological insights from the domains of economics, law, complex systems and philosophy to crystallise a mature scholarly ecosystem. Find him at @wassimalsindi on Twitter.

Fork-governance of cryptocurrencies and decentralised networks examined

[Note: This text written in January 2019 follows on from “Towards an Analytical Discipline of Forkonomy” and “Forkonomy Revisited” and was first included in the Decentralised Thriving Anthology]

Fork your mother if you want fork!

— Jihan Who (@JihanWho) 19 January 2019

First, some definitions.

Forks:

In open source software, project codebase forks are commonplace and occur when existing software development paths diverge, creating separate and distinct pieces of software. Torvalds’ original Linux kernel from 1991 has been forked into countless descendant projects. In the case of blockchain-based cryptocurrency networks implementing ledgers, there exists the prospect of both codebase and ledger forks. A cryptocurrency codebase fork creates an independent project to be launched with a new genesis block, which may share consensus rules with its progenitor but has an entirely different transaction history (e.g. BTC and LTC). A ledger fork creates a separate incompatible network, sharing its history with the progenitor network until the divergent event, commonly referred to as a chain split (e.g. BTC and BCH).
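The difference between the two fork types can be sketched with toy hash-linked chains. This is an illustration only: the block contents, helper names and truncated hashes are hypothetical, and no real network's block format is modelled.

```python
import hashlib
from typing import List


def block_id(parent: str, payload: str) -> str:
    """Toy block identifier: hash of the parent id plus this block's payload."""
    return hashlib.sha256((parent + payload).encode()).hexdigest()[:12]


def extend(chain: List[str], payloads: List[str]) -> List[str]:
    """Return a new chain with one block appended per payload."""
    out = list(chain)
    for payload in payloads:
        parent = out[-1] if out else ""
        out.append(block_id(parent, payload))
    return out


def shared_history(a: List[str], b: List[str]) -> int:
    """Number of leading blocks the two chains have in common."""
    count = 0
    for x, y in zip(a, b):
        if x != y:
            break
        count += 1
    return count


progenitor = extend([], ["genesis-A", "tx1", "tx2", "tx3"])

# Ledger fork: keeps the progenitor's history up to the split, then diverges.
ledger_fork = extend(progenitor[:3], ["tx3-new-rules", "tx4"])

# Codebase fork: same software lineage, but a brand new genesis block.
codebase_fork = extend([], ["genesis-B", "tx1", "tx2"])
```

Because every block id commits to its parent, the ledger fork shares exactly the pre-split blocks, while the codebase fork shares none even though its later payloads look the same.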

3/ Fork terminology is tricky and sometimes muddled. As the concept came to cryptocurrency from OSS development many of the terms have been co-opted but there are further nuances here: particularly to distinguish between codebase / ledger forks, and hard / soft / velvet forks. pic.twitter.com/fh8RLjkAIk

— Parallel Industries ꙮ (@parallelind) 8 October 2018

Consensus rule changes or alteration of the network transaction history may be the cause of such a fracture, deliberate or unplanned. Often when networks upgrade software, consensus rules or implement new features a portion of the network participants may be left behind on a vestigial timeline that lacks developer, community, wallet or exchange support. In the summer of 2018 a fifth of nodes running Bitcoin Cash (BCH) — a minority ledger fork of BTC with significantly relaxed block size limitations — were separated from the BCH network and a non-trivial number of would-be nodes remained disconnected from the BCH network weeks later.

Governance: Decision making process between multiple parties.

Blockchain governance: Decision-making process by mutually distrusting entities in a multi-party distributed system.

On/off-chain governance (aka governance by / of the network): Decisions are made either explicitly through the network’s ledger and UTXOs/balances possessed therein, or via some other (in)formal mechanism such as rough or social consensus.

Read these articles collated by CleanApp for a detailed discussion of key considerations in formally governed blockchain networks.

Immutability: An attribute primarily observed at the protocol layer in the decentralised networking stack, upon which the monetary layer depends for persistence, ensuring the inability of stakeholders or adversaries to alter the transaction record and thereby balances. With this in mind, the oft-quoted concept of ‘code is law’, which refers to immutability in cryptocurrency networks, typically has more to do with preserving the intended use and function of a system and its ledger than with blind adherence to a software implementation regardless of flaws or vulnerabilities.

What Maketh a Fork?

The distinction between what constitutes a vestigial network and a viable breakaway faction is unclear and difficult to objectively parameterise. There is a significant element of adversarial strategy, political gamesmanship and public signalling of (real or synthetic) intent and support via social media platforms. The notions of critical mass and stakeholder buy-in are ostensibly at play since ecosystem fragmentations would be characterised as strongly negative sum through the invocation of Metcalfe’s Law as regards network effects and hence value proposition. Any blockchain secured thermodynamically by Proof-of-Work (PoW) is susceptible to attack vectors such as so-called 51% or majority attacks, leading to re-orgs (chain re-organisations) as multiple candidates satisfying chain selection rules emerge. These can result in the double-spending of funds against entities such as exchanges which do not require sufficient confirmations for transaction finality to be reliable in an adversarial context. Should a network fragment into multiple disconnected populations, adversaries controlling far less computational resource would be within reach of majority hashrate, using either owned or rented computation from sources such as NiceHash or Amazon EC2.
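To make the link between confirmations, hashrate share and fragmentation concrete, the sketch below uses the attacker catch-up calculation from the Bitcoin whitepaper (section 11). The hashrate figures and the `share_of_fragment` helper are hypothetical, and the model ignores real-world factors such as rental cost and network latency.

```python
from math import exp, factorial


def attacker_success_probability(q: float, z: int) -> float:
    """Chance that an attacker with hashrate share q eventually overtakes
    the honest chain after the victim has waited for z confirmations."""
    if not 0.0 <= q <= 1.0:
        raise ValueError("q must be a share between 0 and 1")
    if q >= 0.5:
        return 1.0  # a majority attacker always catches up eventually
    p = 1.0 - q
    lam = z * (q / p)
    prob = 1.0
    for k in range(z + 1):
        poisson = exp(-lam) * lam ** k / factorial(k)
        prob -= poisson * (1.0 - (q / p) ** (z - k))
    return prob


def share_of_fragment(attacker_hashrate: float, honest_hashrate: float) -> float:
    """Attacker's share of the total hashrate active on one network fragment."""
    return attacker_hashrate / (attacker_hashrate + honest_hashrate)


# Hypothetical units: the attacker holds 10, the unified honest network 90.
q_whole = share_of_fragment(10, 90)

# After a split, suppose one fragment retains only 20% of the honest hashrate.
q_fragment = share_of_fragment(10, 18)

risk_whole = attacker_success_probability(q_whole, z=6)
risk_fragment = attacker_success_probability(q_fragment, z=6)
```

The same absolute attacker resource represents a much larger share of the smaller fragment, so the same six-confirmation policy offers far weaker protection there.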

4/ What ingredients are needed to sustain a “minority” network fragment such as $ETC? For a PoW network, it’s going to need significant hashrate to avoid replay attacks, re-orgs & wipeout risks – especially if #codeislaw & immutability is respected. Devs, users & businesses too. pic.twitter.com/G63TJHT4VP

— Parallel Industries ꙮ (@parallelind) 8 October 2018

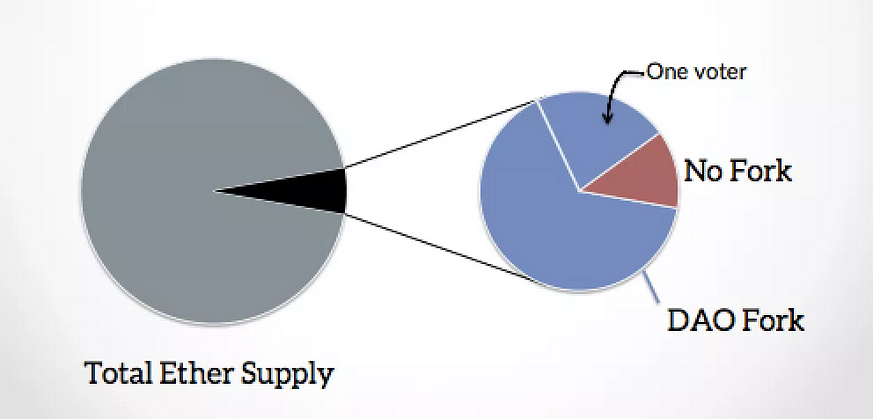

A striking example of this was the divergence of the Ethereum developer and leadership cadre (ETH) from the canonical account-oriented Ethereum blockchain (ETC) due to the exploitation of a flawed smart contract project resembling a quasi-securitised decentralised investment fund known as The DAO (Decentralised Autonomous Organisation). In this case the Ethereum insiders decided to sacrifice immutability and by extension censorship-resistance in order to conduct an effective bailout of DAO participants which came to exercise Too-Big-To-Fail influence over the overall Ethereum network, insider asset holdings, token supply and mindshare. A social media consultation process in conjunction with on-chain voting was employed to arrive at this conclusion though both methods are known to be flawed and gameable. During the irregular state transition process akin to a rollback, a co-ordinated effort between miners, exchanges and developers took place on private channels, exposing the degree of centralisation inherent in the power structures of constituent network participants.

The key event which transformed the canonical Ethereum blockchain (where the DAO attacker kept their spoils) from a vestigial wiped-out chain to a viable if contentious minority fork was the decision by the Bitsquare and Poloniex exchanges to list the attacker’s timeline as Ethereum Classic (ETC). Alongside this, high-profile mining participants such as Chandler Guo, well-resourced financial organisations such as Grayscale Invest (a subsidiary of Digital Currency Group) and former development team members such as Charles Hoskinson publicly declared support and deployed developers and significant hashrate to defend the original Ethereum network. ETC now exists as an independent and sovereign network with priorities, characteristics and goals diverging from those of the larger Ethereum network ETH.

Forks and network governance: the case of Bitcoin and SegWit (excerpt from Forkonomy paper)

For a range of reasons, there is often strident resistance to hard forks — irreversible protocol upgrades or relaxing of the existing consensus ruleset — in “ungoverned” trust-minimised cryptocurrency networks such as BTC. The lack of controlling entities may lead to a chain split and lasting network partition if the delicate balance of stakeholder incentives fails in the presence of a divergent event. The implementation of SegWit (Segregated Witness) by the BTC network was eventually achieved in 2017 as a backward-compatible soft fork following several years of intense political and strategic maneuvering by the constituent stakeholders in the BTC network. This off-chain governance process of emergent consensus requiring supermajority or unanimity measured by miner signalling has proven to be an inefficient and gameable mechanism for administering the BTC network. Certain stakeholder constituencies such as the developers maintaining the reference Bitcoin Core software client implementation of BTC could not easily reach agreement with mining oligopolists and so-called big block advocates over the optimum technological trajectory for the BTC network.

Major stakeholders of the mining constituency strongly opposed SegWit as it would render a previously clandestine proprietary efficiency advantage known as covert ASICBoost ineffective on the canonical BTC chain. A grassroots BTC community movement campaigning for a User Activated Soft Fork (UASF) for SegWit implementation and a face-saving Bitcoin Improvement Proposal (BIP91) facilitated the eventual lock-in of the SegWit upgrade in the summer of 2017. A contentious network partition took place in August 2017, giving rise to the Bitcoin Cash (BCH) network which rejected SegWit and opted instead for linear on-chain scaling. As BCH changed the block size and loosened the consensus ruleset without overwhelming agreement from all constituencies of the BTC network, it is difficult to find a basis for BCH proponents’ claims to be the canonical Bitcoin blockchain without invoking appeals to emotion, authority or other logical fallacies. The continuing presence of Craig S. Wright and his claims to be a progenitor of Bitcoin is an example of these attempts at legitimacy.

What the #forkgov? Fork-resistance and governance

Given the significant downside potential of real and perceived threats to the resilience and legitimacy of a fragmenting network and the loss of associated network effects, the ability of a blockchain-based protocol network to demonstrate fork resistance adds significant strength to its value proposition. Two notable examples of networks attempting to utilise such a mechanism are Tezos and Decred. Decred is an example of a hybrid PoW/PoS monetary network which is implementing a proposal and governance mechanism termed Politeia. Since coin-holders have voting rights based on stake weight, they have the ability to keep miner and developer constituencies honest through the mechanism to reach decisions by majority stakeholder consensus on matters including hard forks. These lessons were ostensibly learned through the developer team’s experiences in writing a BTC client which they felt was not appraised objectively by the Bitcoin Core developer ecosystem. Decred’s fork resistance is effectively achieved by the fact that most stakeholders would be non-voting on a minority chain, so it would remain stalled as blocks would not be created or propagated across the upstart network.
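The stalling effect described above can be sketched as a simple binomial calculation. It assumes a rule in which a fixed number of tickets is called to vote on each block and a majority of them must respond. The defaults of 5 tickets and 3 required votes reflect my understanding of Decred's parameters and should be checked against its documentation; the independence of ticket holders is a further simplifying assumption.

```python
from math import comb


def block_votable_probability(
    voting_fraction: float,
    tickets_per_block: int = 5,  # assumed parameter, see note above
    min_votes: int = 3,          # assumed parameter, see note above
) -> float:
    """Probability that enough of the called tickets vote for a block to be
    accepted, if each ticket independently belongs to a stakeholder who is
    voting on this chain with probability `voting_fraction`."""
    p = voting_fraction
    n = tickets_per_block
    return sum(
        comb(n, k) * p ** k * (1.0 - p) ** (n - k)
        for k in range(min_votes, n + 1)
    )


majority_chain = block_votable_probability(0.9)
minority_chain = block_votable_probability(0.1)
```

Under these assumptions a chain followed by only a tenth of ticket holders sees well under one block in a hundred gather enough votes, which is the sense in which a minority fork would stall.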

Taking a high-level perspective, let’s address the most general question: are these two notions meaningfully compatible? If we think of any natural process in the Universe — from the celestial to the tribal — as accretions and communities grow in size and complexity, scalability challenges increase markedly. Minimising accidental chain splits during protocol upgrades is a worthy goal. However, denying a mechanism to allow factions a graceful and orderly exit has upsides in preserving the moat of network effect but at the cost of internal dissonance, which may grow over time and lead to second-order shenanigans. Sound familiar?

BrexitCoin combines Proof-of-Flag governance and historical obfuscation so that mistakes are guaranteed to be repeated. Unelected leaders of BrexitCoin committee must wait for a scandal to occur before the next “democracyblock” may be proposed. https://t.co/cNpICRxHCI pic.twitter.com/dsgYr09UG5

— Byzantine Business School (@BusinessSch001) 4 June 2018

One can look at ledger forks in a few different ways as good, bad or neutral:

[Good] A/B/…/Z testing of different technical, economic or philosophical approaches aka “Let the market decide, fork freedom baby!”

[Bad] Deleterious to network effects of nascent currency protocols with respect to Metcalfe or (IMO much more relevant) transactome-informed network capital theory as discussed by Gogerty.

[Neutral] An inevitability of entropy and/or finite social scalability: as these networks grow and mature, it is not realistic to keep all stakeholders sufficiently aligned for optimal network health.

As such, protocol layer fork resistance and effective public fora with voting mechanisms can certainly be helpful tools, but there is a question as to whether democracy (the tyranny of the majority) should be exercised in all cases. If there was a “block size” style civil war in Tezos or Decred with no acceptable compromise in sight, would the status quo still be the best situation in all cases?

Worth noting that I predicted the first Tezos hard fork over a year ago (@tzlibre).

— Philip Daian (@phildaian) 4 October 2018

There is *nothing* that supercedes fork-based governance for cryptocurrencies, and that is a good thing. https://t.co/E0jRomnvqi

My perspective is that fork-resistance will largely redistribute the manifestations of discontent rather than provide a lasting cure to ills, and the native network governance mechanisms may be gamed by either incumbents or ousters. More time is needed to see how decision-making regarding technical evolution unfolds in both networks. Decred seems to be sitting pretty with a fairly attack-resilient hybrid PoW/PoS system, but there are some “exclusionary forces” in the network leading to the escalating DCR-denominated costs of staking tickets necessary to receive PoS rewards and participate in proposal voting, denying access to the mechanism to smaller holders.

Demand for tickets and staking rewards naturally increases with ongoing issuance, as the pool of coin holders wanting to mitigate dilution widens. As the ticket price is dynamic and demand-responsive, this creates upwards pressure which would make tickets inaccessible for a growing proportion of coin holders. At time of writing, “ticket splitting” allowing smaller holders to engage in PoS is available from some stake pools and self-organised collectives but the process is not yet automated in reference clients. On the other hand, the ongoing bear market has seen the USD ticket price fall from ~$8–10k at the January and May 2018 peaks to ~$2k today in late January 2019, so those entering Decred with capital from outside the cryptocurrency domain would likely be undeterred. Data from dcrdata.org and coincap.io.

Further, as per Parallel Industries’ TokenSpace taxonomy research, staking rewards resemble dividends and token-based governance privileges resemble shareholder rights which make Decred appear a little closer to the traditional definition of a capital asset than pure PoW systems. This may or may not be an issue depending how regulation unfolds. Tezos has those potential issues plus the regulatory risk from the token sale. Decred’s airdrop may not have distributed the coin as fairly as possible but will undoubtedly attract a lower compliance burden than a token sale or premine.

“Activist Forks” & “Unfounder Forks”

Can you appreciate the technology utility but dislike the economic, human and compliance issues packaged together with a project?

Taking this a step further, these dissonant groups may conduct a guerrilla campaign inside a network to focus attention on their cause. Last summer, a few anti-KYC factions of Tezos appeared on social media outlets prior to network launch, though since the mainnet launch things have quietened down somewhat. One faction which still apparently intends to create a fork of Tezos changed tack and became a delegated staker within the network whilst continuing to voice dissent. Perhaps this “fork activism” can be interpreted as a response to the “fork-resistance” of Tezos.

No-one is being fooled by high-friction Futility tokens. https://t.co/NHikROquE8

— Parallel Industries ꙮ (@parallelind) 2 November 2018

So, what else could a fork activist do? Take a look around at the ongoing ICO bonfire of the vanities which is largely due to poorly thought out sales of high-friction futility tokens infringing upon / attempting to circumvent various regulations around the world. The prospect of removing the token issuers and the tokens themselves once treasuries are liquidated (by themselves, or by lawmakers) and development ceases is quite attractive indeed — will we see a wave of “unfounder forks” as in this example? Perhaps operating in reverse to Simon de la Rouviere’s “Tokenised Forking” where both tokens and founders are excised.

Been thinking about this, started already and will only happen more from here – “activist” or “unfounder” forks if you will.

— Parallel Industries ꙮ (@parallelind) 20 January 2019

Let the ICO vendors bear legal risk & develop, then remove the token friction & promoters upon launch/runway expiry. #forkonomy https://t.co/7LpZvpbQhc

Wassim’s “What The #ForkGov” article exploring fork-based governance of decentralised networks has been included in DAOStack’s “Decentralised Thriving” Anthology. Download it from here.

ICYMI on In The Mesh, read the next parts there first.

This article is the third in a four-part series by Matt ฿ (@MattoshiN) and Wassim Alsindi (@parallelind) on the use of Bitcoin and the technology stack built atop it to assist those living under oppressive regimes or in conflict zones, and those seeking to flee them. Read the first and second instalments.

Bitcoin is, above all, agnostic. It serves anything, and anyone, with no regard for who users are or what their intents might be, provided they play by the rules: rules, not rulers. What one may see in the network, protocol and currency is a context-dependent Rorschach test: one person’s rat poison is another’s meal ticket. While legacy financial institutions are fuelling a wave of social media deplatformings through the ever-expanding Operation Chokepoint, Bitcoin rises to prominence as a tool for the marginalised, ostracised, oppressed and forgotten. It enables any human to develop a parallel means to transact and store wealth and, as time goes on, the ways and means of using Bitcoin grow in variety and quality. There is no doubt that volatility in BTC-fiat crossrates makes external measures of cryptocurrency value vary wildly, and downside risk is obviously unhelpful, especially when you are putting your life on the line. On the other hand, when national currencies undergo hyperinflationary events Bitcoin can be one of few accessible havens of relative stability. As of today, stablecoins are not the answer.

Freedom means everyone can use it, regardless of your opinion on their motivations, political leanings or priorities. Guerrilla and outsider organisations of all flavours and persuasions will be early adopters of decentralised technologies, and there’s nothing that can be done about that. The precautionary principle doesn’t work in permissionless environs and there is no ‘off switch’ — a feature, not a bug.

Bitcoin heralds a new age of ‘extreme ownership’ — or at least, provides the option for individuals to truly exercise sovereignty over their wealth. When used correctly, it is both unseizable and uncensorable. In the digital age, few things are more important than ensuring that wealth can be stored and transmitted without custodians or other third parties keeping personally identifiable information, blacklisting recipients or otherwise denying/reversing transactions. While physical cash offers individuals a degree of anonymity in their day-to-day exchanges, the push towards digital payments threatens this privacy by creating digital footprints that could be exploited for the purposes of surveillance.

How an individual ‘experiences’ Bitcoin is entirely up to them. On one end of the spectrum are those who have no need for true possession — consider speculators that rely on custodial exchanges or wallets. On the other are power users seeking granular control for maximising their privacy and financial self-sovereignty — functions like coin control, UTXO mixing or operating a fully validating node. Evidently, the further towards this end of the spectrum they tend, the more the value proposition of Bitcoin becomes apparent.

The appeal of Bitcoin today is undoubtedly rooted in the ease of its trust-minimised, rapid and global transfer, paired with the change-resistance and (algorithmically enforced) scarcity that precious metals have historically exhibited. Where faith in centrally-issued fiat currencies requires that participants entrust governments with maintaining monetary legitimacy and purchasing power, faith in a cryptocurrency network’s continued healthy function merely requires that participants act in their own self-interest; consensus is driven by active nodes. Indeed, you’ll have a hard time garnering support for an upgrade that would endanger the wealth of others, such as inflating the money supply or sacrificing security for convenience. However, no system is infallible, and it’s foolhardy to overlook some potentially dangerous attack vectors executable in various manners. These range from eclipse attacks, which geographically or otherwise target individual or grouped subsets of nodes so as to obscure and alter their view of the canonical blockchain, to state-sponsored 51% attacks and mass deanonymisation efforts which could vastly undermine the security and credibility of the network.

Fungibility and privacy are linked concepts — an asset’s fungibility preserves the privacy of the individual holding it. Assets such as gold and fiat cash are considered highly fungible, as it’s near impossible to distinguish between units of the same type. Conversely, something like a rare painting would be non-fungible, on account of its uniqueness. Functionally — for the most part — Bitcoin appears to be fungible: the vast majority of merchants will indiscriminately accept payments regardless of the provenance of coins.

Upon closer examination however, the situation is less rosy. As the protocol relies on a public ledger to keep track of the movement of funds, this provides a rich source of information for the intrepid data miner looking to perform analyses and potentially deanonymise users. “Blockchain analytics” companies (and their governmental clientele) have been known to track the propagation of UTXOs through the network that have passed through a given address or that have interacted with ‘blacklisted’ entities.

There’s an entire class of coins which offer varying degrees of privacy within their protocols and address a niche that Bitcoin inherently lacks. In life-and-death situations, linking a BTC transaction or an address to a real world identity can have grave consequences in locations where authorities are hostile. On the other hand, if Bitcoin was as private as Monero or Zcash, then its monetary soundness would be dependent on cryptographic assumptions holding true. An example of such a situation is the recently disclosed vulnerability in Zcash which arose from cryptographic errors which — although complex to exploit — would have allowed an adversary to surreptitiously inflate the supply in the secret “shielded pool”.

Despite the transparent nature of Bitcoin’s ledger, it can be used privately. Whilst the protocol doesn’t incorporate strong guarantees itself at present, this is set to change with the implementation of improvements such as Confidential Transactions, MAST, Taproot and Schnorr signatures. Externally coordinated obfuscation techniques are in use today, most commonly CoinJoin implementations such as JoinMarket and ZeroLink. These allow users to pool and jointly transact multiple inputs so that a degree of plausible deniability is assured, as observers cannot map outputs to specific inputs.
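The pooling idea behind CoinJoin can be sketched as follows. This toy model only shows why equal-valued, shuffled outputs cannot be mapped back to inputs by amount alone. It ignores fees, signatures and coordination, and does not reflect how JoinMarket or ZeroLink are actually implemented. All names and amounts are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Participant:
    input_amount: int    # satoshis contributed
    mixed_address: str   # fresh address for the equal-valued output
    change_address: str  # address for any remainder


def build_coinjoin(
    participants: List[Participant],
    denomination: int,
    seed: Optional[int] = None,
) -> Tuple[List[int], List[Tuple[str, int]]]:
    """Combine all inputs into one transaction with one equal-valued output
    per participant, plus change outputs, in shuffled order."""
    if any(p.input_amount < denomination for p in participants):
        raise ValueError("every input must cover the common denomination")
    inputs = [p.input_amount for p in participants]
    outputs = [(p.mixed_address, denomination) for p in participants]
    outputs += [
        (p.change_address, p.input_amount - denomination)
        for p in participants
        if p.input_amount > denomination
    ]
    random.Random(seed).shuffle(outputs)  # output order reveals nothing
    return inputs, outputs


participants = [
    Participant(150_000, "alice-mixed", "alice-change"),
    Participant(230_000, "bob-mixed", "bob-change"),
    Participant(100_000, "carol-mixed", "carol-change"),
]
inputs, outputs = build_coinjoin(participants, denomination=100_000, seed=1)
equal_outputs = [o for o in outputs if o[1] == 100_000]
```

An observer sees three indistinguishable 100,000-satoshi outputs, so each could plausibly belong to any of the three inputs. The change outputs remain linkable by amount, which is one reason real implementations need more care than this sketch takes.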

The recent development of more sophisticated CoinJoin transaction types such as Pay-to-Endpoint (also known as PayJoin/Stowaway) and Ricochet has demonstrated the shortcomings of chain analytics capabilities as they are understood today. One cautionary note is that although we have many separate techniques for improving Bitcoin transaction privacy, interactions between these elements are not necessarily widely understood. As a result, there are non-zero probabilities of critical information leakage or failure of certain processes, and users should not assume that all tools have been tested thoroughly in combination. For example, sending mixed UTXOs from a CoinJoin wallet into a Lightning node may lead to deanonymisation given that Lightning node IDs are public.

Since the Bitcoin protocol has displayed such admirable resilience and uptime in the past 10 years, authorities at the local, regional, national or global scales can only try to apply pressure to the “soft” interfaces between the network and the wider world such as exchanges, merchants, miners, hardware and software vendors. Inconsistent laws arising from governments’ knee-jerk reactions towards Bitcoin are an ongoing reality.

Ensuring regulators are in possession of independent tools and information sources will minimise misunderstandings leading to arbitrary bans, restrictions, licenses, fines, jail or seizure. Even upstream infrastructure such as ISPs, domain registrars and payment intermediaries are coming under increasing pressure. One aspect of particular concern is the conflation of Bitcoin with tokens, ICOs or other blockchain projects raising funds via regulatory arbitrage. China now apparently requires the registration of cryptocurrency nodes with authorities. Where persons or businesses operating cryptocurrency enterprises are kept under close watch by corrupt officials, they are at risk of extortion or kidnap.

Another front on which there is work to be done is on the fungibility of bitcoin UTXOs themselves. As mentioned above, there is a growing industrial niche providing analytical services to governments and businesses submitting to state compliance procedures. Though they may oversell their capabilities to clients, it is known that exchanges supply information to them. One attempt to deanonymise identifiers on a network such as Bitcoin has involved attempting to use metadata such as browser fingerprinting, language preferences, node and web client IP addresses for location and to link these to particular addresses or UTXOs. Even a small part of the user graph being deanonymised has wider potential implications, due to the public nature of the ledger as discussed above. Know-Your-Customer and Anti-Money Laundering laws (KYC/AML) collectively constitute the greatest privacy risk to individuals using Bitcoin today.

Dusting is also a potential chain analysis technique which takes advantage of poor coin selection in wallets by sending tainted UTXOs to target addresses and tracking their propagation. This vector primarily targets merchants (exchanges and other economic nodes) as individual users can easily circumvent such attacks by marking dust UTXOs as unspendable. The mechanism of transaction itself is also important to recognise in light of the recent OFAC sanction of addresses linked to Iranian nationals. How is any entity going to stop people interacting with sanctioned addresses in a push system?
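The dust countermeasure mentioned above, marking dust UTXOs as unspendable, can be sketched as a wallet-side filter applied before coin selection. The threshold, the largest-first selection strategy and all names are assumptions for illustration and do not describe any particular wallet's behaviour.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Utxo:
    txid: str
    amount: int           # satoshis
    frozen: bool = False  # frozen coins are never selected for spending


def freeze_dust(utxos: List[Utxo], dust_threshold: int) -> None:
    """Mark every UTXO at or below the threshold as unspendable."""
    for utxo in utxos:
        if utxo.amount <= dust_threshold:
            utxo.frozen = True


def select_coins(utxos: List[Utxo], target: int) -> List[Utxo]:
    """Largest-first selection over spendable coins only."""
    spendable = sorted(
        (u for u in utxos if not u.frozen), key=lambda u: u.amount, reverse=True
    )
    selected: List[Utxo] = []
    total = 0
    for utxo in spendable:
        if total >= target:
            break
        selected.append(utxo)
        total += utxo.amount
    if total < target:
        raise ValueError("insufficient spendable funds")
    return selected


wallet = [Utxo("a", 50_000), Utxo("b", 30_000), Utxo("dust", 546)]
freeze_dust(wallet, dust_threshold=1_000)  # hypothetical threshold
spend = select_coins(wallet, target=60_000)
```

Because the dust output is never combined with the wallet's other coins as an input, the sender of the dust gains no common-ownership link from later spends.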

For the most part, many of the existing issues will become less pressing over time as the Bitcoin network and the ecosystems built around it mature. The reduction of hashpower aggregation in certain regions such as the West of China makes it increasingly difficult for a malicious (private or state-sanctioned) actor to commandeer dangerous amounts; more skin in the game from cryptocurrency businesses contributing to a state’s GDP and tax coffers makes the budgetary penalty for nations greater should they consider outright bans on cryptocurrencies or adversarial mining; and advances in cryptography harden Bitcoin’s privacy-preserving potential.

In the final part of this series the myriad tools, techniques and strategies to transact using Bitcoin in contexts where personal privacy and freedom are under threat will be explored.

Thanks to Yuval Kogman, Alex Gladstein, Richard Myers, Elaine Ou and Adam Gibson for helpful feedback.

Wassim Alsindi directs research at independent laboratory Parallel Industries, analysing cryptocurrency networks from data-driven and human perspectives. Find him at www.pllel.com and @parallelind on Twitter.

Matt B is a writer and content strategist in the cryptocurrency space with a particular interest in Bitcoin and privacy technology. He can be reached at itsmattbit.ch and @MattoshiN on Twitter.

Images by Kevin Durkin for In The Mesh

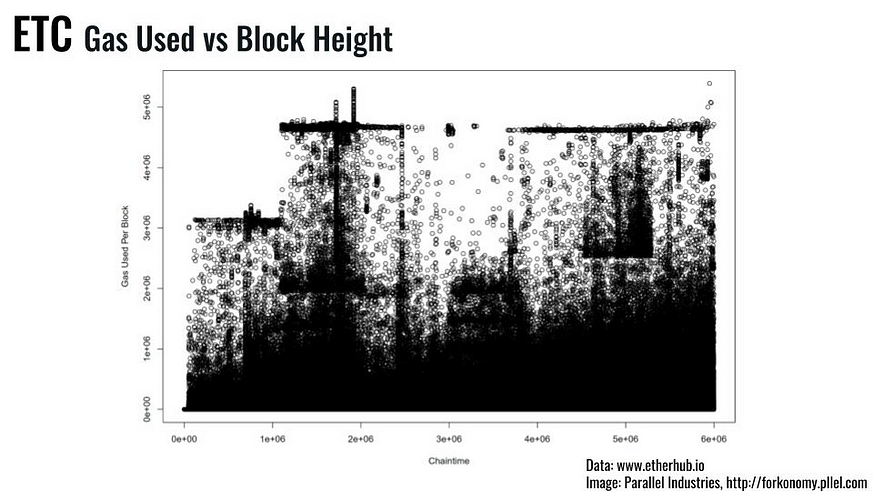

How does anything get done if there are no leaders? Why hasn’t ETC died by being abandoned by the Ethereum Foundation after TheDAO hard fork? The ecosystem of participants and stakeholders working in and around the ETC network is examined in outline below.

Making changes to Ethereum Classic consensus rules is “ungoverned” in a similar way to Bitcoin and Ethereum, with little appetite for large numbers of consensus-breaking upgrades. Currently it is an ad hoc process where ECIP proposals are raised on Github, discussed in public/semi-public fora and, should they be widely supported without contention, locked in to the nominally canonical “Classic-Geth” client, with the other clients (Parity Labs’ eponymous software and IOHK’s Mantis) merging in response. In the case of a contentious proposed upgrade some arbitrary signalling criteria could potentially be set (e.g. a percentage of miners upgrading or signalling, or an on-chain carbon vote as used by ETH to justify the DAO hard fork), though this has not occurred in ETC since the events which led to the creation of the network.

As with other networks based on the original Ethereum design, some parameters such as adjustments to the gas limit per block (restricting the amount of EVM computation in a similar way to block size / weight in Bitcoin-derived networks) can be enacted in small increments on a per block basis via miner signalling. There is currently some discussion to motivate a decrease in the gas limit per block in order to avoid the chain growth rate issues which make running ETH full nodes a challenge in terms of burdensome resource requirements. The likely aggregation of ETC hashrate among a small number of big mining farms, cooperatives and pools presents issues with reliance on miner signalling, as recently evidenced in Bitcoin when the merge-mined EVM Rootstock sidechains went live with 80% of network hashrate signalling. The naive downstream adoption of “default” Ethereum settings such as ETH’s 8 million gas limit per block is also a potential issue for ETC’s ungovernance to navigate.
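The incremental adjustment mechanism can be sketched as below. It assumes the rule from the original Ethereum specification as I understand it: each block's gas limit must differ from its parent's by strictly less than the parent's limit divided by 1024, with a floor of 5,000. Whether ETC clients use exactly these constants should be verified. The function names are hypothetical, and every miner is assumed to vote towards the same target.

```python
MIN_GAS_LIMIT = 5_000  # assumed protocol floor
BOUND_DIVISOR = 1_024  # assumed per-block adjustment bound


def max_step(parent_gas_limit: int) -> int:
    """Largest change allowed in one block (the bound is a strict inequality)."""
    return parent_gas_limit // BOUND_DIVISOR - 1


def next_gas_limit(parent_gas_limit: int, miner_target: int) -> int:
    """Move as far towards the miner's target as a single block permits."""
    step = max_step(parent_gas_limit)
    if miner_target >= parent_gas_limit:
        return min(miner_target, parent_gas_limit + step)
    return max(miner_target, parent_gas_limit - step, MIN_GAS_LIMIT)


def blocks_to_reach(start: int, target: int) -> int:
    """Blocks needed to move the limit from start to target if every
    block is mined by someone voting for the target."""
    if start < MIN_GAS_LIMIT or target < MIN_GAS_LIMIT:
        raise ValueError("limits below the protocol floor are not reachable")
    limit, blocks = start, 0
    while limit != target:
        limit = next_gas_limit(limit, target)
        blocks += 1
    return blocks


# Hypothetical scenario: halving an 8 million gas limit.
blocks_needed = blocks_to_reach(8_000_000, 4_000_000)
```

Since each block can shave off only about a tenth of a percent, halving the limit takes several hundred consecutive blocks of agreement, which is why concentrated hashrate matters for this kind of signalling.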

Two hard fork network upgrades have taken place in the ETC network — ECIP-1010 to remove the “difficulty bomb” and ECIP-1017 to institute a supply cap with asymptotic supply curve.

The decision-making process could be better organised, more transparent and more clearly defined, and refinements to the ECIP process are currently being discussed. At present most informal community discussion takes place on ETC’s Discord server, with ECIPs themselves posted on the nominated Github account (ethereumclassic). This followed a power struggle and takeover of the previous canonical Github account (ethereumproject), ostensibly related to the situation with ETCLabs discussed below. ETCLabs appear to be preparing to implement their own parallel “ECLIP” improvement proposal scheme, though this may be a miscommunication rather than a “consensus hostage situation”; the situation is unclear at time of writing. Below are a few links to recent discussions and proposals relating to how Ethereum Classic reaches decisions on network upgrades and changes.

Ethereum Classic (ETC): Putting Together the New Decentralized ECIP Process

Ethereum Classic Improvement Proposals

Some stakeholders in ETC want to see closer collaboration with ETH, some are ambivalent and others are opposed. The recent announcement of Bob Summerwill as ETC Cooperative Executive Director is noteworthy as he was instrumental in founding the Enterprise ETH Alliance, was involved in the Ethereum Foundation and was a senior figure at Consensys. There are some existing collaborative projects between ETH and ETC, including Akomba Labs’ “Peace Bridge” to allow cross-chain transactions, the Kotti unified PoA testnet and some recent discussions regarding ETC considering the adoption of aspects of the Ethereum 2.0 roadmap.

The last few months have seen a change in the composition of the ecosystem around Ethereum Classic, as the previously pre-eminent privately funded core development team “ETCdev” collapsed due to lack of funds, with another entity, “ETCLabs”, forming a new developer team “ETCLabs Core” with significant overlap of personnel. Some community members have described the sequence of events as a corporate takeover attempt; others do not seem so worried.

“The ETC community is still small and, in this bear market, lacks funding from volunteer investors or other sources to initiate new core maintenance and development projects or pay new core developers quickly. This is because there are no leaders, foundations, pre-mines, treasuries, protocol taxation or any other financing gimmicks that so much contaminate other centralized projects.”

The Ethereum blockchain launched on 30th July 2015. When the Ethereum Foundation conducted a hard fork as part of TheDAO’s exploit recovery (“irregular state transition”) on July 20th 2016, they kept the name and ticker symbol Ethereum / ETH. The canonical chain branch in which TheDAO exploiter kept their spoils survived against most observers’ expectations and attracted community, developer, exchange and mining support. The unforked chain came to be known as Ethereum Classic (ETC).

Ethereum Classic (ETC) is pure Proof of Work utilising the Ethash (Dagger Hashimoto) algorithm. It is the second largest network using this algorithm, marshalling approximately 15–25 times less hashrate than Ethereum (ETH). Due to its situation as a minority PoW network without 51% attack mitigations at the protocol or node levels, it has been deemed to be vulnerable to thermodynamic attacks, and such attacks have been observed recently. Mining is permissionless, so the identities and extent of participation of block producers are not necessarily known. Some network and blockchain analysis of the ETC mining ecosystem is currently being undertaken. There is a high degree of suspicion that covert FPGA and/or ASIC mining was employed in the recent majority attacks. Most of the hashrate employed in the recent attacks is suspected to be of exogenous origin to the existing Ethash ecosystem and marketplaces such as Nicehash.
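To make the minority-network exposure concrete, here is a small arithmetic sketch. It assumes only the 15–25 times hashrate ratio quoted above and asks what share of ETH's Ethash hashrate would equal all of ETC's honest hashrate. The function name is hypothetical, and the sketch ignores rental prices, hardware differences and the suspected non-Ethash-market origin of the attacking hashrate.

```python
from fractions import Fraction


def eth_share_to_match_etc(ratio: int) -> Fraction:
    """Share of ETH hashrate equal to ETC's total, if ETH has `ratio` times more."""
    if ratio <= 0:
        raise ValueError("ratio must be a positive integer")
    return Fraction(1, ratio)


if __name__ == "__main__":
    for ratio in (15, 25):
        share = eth_share_to_match_etc(ratio)
        print(f"ratio {ratio}: {float(share):.1%} of ETH hashrate matches ETC")
```

An attacker needs only slightly more than this share to out-mine the honest ETC network, which is why a single large Ethash pool or farm redirecting its hashrate would be sufficient in principle.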

Ethereum’s whitepaper was first circulated in late 2013 and there was a “token crowdfunding” (= ICO) in 2014. Approx 72 million of the 105 million supply issued were distributed in the ICO. Mining, which provides block and uncle rewards, has distributed the remainder. Work is ongoing currently to compare the movement of balances either side of the ETC/ETH fork. ETC’s monetary policy was set to “5M20” by a hard fork in 2017 (ECIP-1017), reducing mining rewards by 20% every 5 million blocks, which corresponds to approximately 5% annual supply increase. The same hard fork also installed a fixed supply cap.
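The “5M20” schedule can be sketched as a geometric series. The sketch below assumes an initial base block reward of 5 ETC and that the first era covers blocks 1 to 5,000,000; both are assumptions for illustration rather than figures stated in this article. Uncle rewards and the ICO distribution are ignored, so the totals cover base block rewards only.

```python
from fractions import Fraction

ERA_LENGTH = 5_000_000          # blocks per era ("5M")
REDUCTION = Fraction(1, 5)      # 20% cut per era ("20")
INITIAL_REWARD = Fraction(5)    # assumed base reward in ETC per block


def era_of(block_number: int) -> int:
    """Zero-based era index, assuming era 0 spans blocks 1..5,000,000."""
    return max(block_number - 1, 0) // ERA_LENGTH


def block_reward(block_number: int) -> Fraction:
    """Base block reward after applying one 20% cut per completed era."""
    return INITIAL_REWARD * (1 - REDUCTION) ** era_of(block_number)


def cumulative_base_rewards(eras: int) -> Fraction:
    """Total base rewards issued over the first `eras` complete eras."""
    return sum(
        (INITIAL_REWARD * (1 - REDUCTION) ** e * ERA_LENGTH for e in range(eras)),
        Fraction(0),
    )


# Limit of the geometric series: first-era issuance divided by the reduction.
ASYMPTOTIC_BASE_REWARDS = INITIAL_REWARD * ERA_LENGTH / REDUCTION

if __name__ == "__main__":
    for block in (1, 5_000_001, 10_000_001):
        print(block, float(block_reward(block)))
    print(int(ASYMPTOTIC_BASE_REWARDS))
```

Because each era issues 80% of the previous one, cumulative issuance converges rather than growing without bound, which is how a fixed percentage cut yields a supply cap with an asymptotic curve.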

Ethereum “became” Ethereum Classic because the Ethereum Foundation asserted intellectual property rights over the “Ethereum” name despite branching away from the canonical chain. This is still a point of contention and some prefer the name “ETC” as a subset of stakeholders look for alternative nomenclature to “Classic”.

What is the reference node implementation?

This is also a bone of contention in ETC. When ETCdev ceased operation, the hitherto canonical client Classic-Geth written in Golang stopped being reliably maintained. ETCLabs Core maintains Multi-Geth but not all stakeholders in the ETC ecosystem are currently comfortable using their software given their ostensible desire to have an independent ECLIP improvement proposal pathway which appears more hard-fork than soft-fork oriented.

Are there any other full node implementations?

Parity Labs maintains their Parity client written in Rust.

IOHK maintains their Mantis client written in Scala.

How is client development funded?

Development is funded by private organisations: ETCLabs, Parity and IOHK fund client development following the demise of ETCdev. ETC Cooperative (partly funded by DCG/Grayscale and DFG) also supports protocol development.

There has been resistance to adopting an on-chain treasury as proposed by IOHK, which some stakeholders see as inherently centralising. However, given the collapse of ETCdev due to funding shortfalls and the absence of alternative funding models beyond “build it and they will come”, the status quo is at risk of prolonging a continuing tragedy of the commons scenario. There are some grants and funding opportunities via ETCLabs, but at present these are focused on business/startup incubation.

Most funds are controlled by companies but ETC Cooperative is now a 501(c)(3) non profit based in the USA. There is also a small community fund controlled by a multi-signature wallet but there are no current plans to disburse this.

What other software does the entity(ies) which funds the reference node produce?

Hard to answer conclusively since there is a lack of agreement over what the reference implementation currently is.

Parity — Rust ETH client, Polkadot/Substrate, Bitcoin client, Zcash client.

ETCdev — defunct, Emerald application development framework and tools, Orbita sidechains.

ETC Cooperative — developer tooling and infrastructure e.g. recent Google BigQuery integration.

IOHK — a lot of software for Cardano, ZenCash, ETC.

ETCLabs — ?

What else do the entities which develop or fund the reference node do? (not software)

Parity — Web3 Foundation

ETCLabs — VC/Startup incubator

ETC Coop — General PR, community and ecosystem development, conference organisation, enterprise & developer relations

IOHK — PR, summits, art projects (Symphony of Blockchains), academic collaborations, VC partnership and research fellowships with dLab / SoSV / Emurgo….

DCG/Grayscale/CoinDesk — PR, financial instruments e.g. ETC Trust, OTC trading…

How is work other than development (e.g. marketing) funded?

It is unclear how funding and support for non-development activities is apportioned.

DCG/Grayscale and DFG fund ETC Cooperative

DFG funds ETCLabs

Related projects — Are there any significant projects which are related? For example, is this a fork of another project? Have other projects forked this one?

Ethereum (ETH) and Callisto (CLO) were both ledger forks of this project. There may have been more minor codebase or ledger forks.

Significant Entities and Ecosystem Stakeholders

ETCLabs is a for-profit company with VC/Startup and core development activities funded by DFG, DCG, IOHK and Foxconn.

ETC Cooperative is a 501(c)(3) non profit based in the USA funded by DCG and DFG.

ETCdev (defunct)

IOHK (Input Output Hong Kong) is the company led by Charles Hoskinson who previously worked on BitShares, Ethereum and now Cardano.

DCG (Digital Currency Group) is Barry Silbert’s concern which contains in its orbit Grayscale Investments, CoinDesk, Genesis OTC Trading amongst other organisations.

DFG (Digital Finance Group) is a Chinese diversified group concerned with investments in the blockchain and cryptocurrency industry, OTC trading and venture funds.

Wassim Alsindi directs research at independent laboratory Parallel Industries, analysing cryptocurrency networks from data-driven and human perspectives. Find him at www.pllel.com and @parallelind on Twitter.